Hemant Bhatt

Build complex autonomous agents with Vercel AI SDK

Beyond basic LLMs

Want to create expert financial analysts that gather and process complex data on demand? Or perhaps you need sophisticated customer service systems that handle tickets, escalations, and refunds without human intervention? AI agents can be your new go-to solution.

What Makes AI Agents Different?

Unlike basic Large Language Models, AI agents come equipped with two critical capabilities:

- Access to Tools: AI agents can interact with external systems, databases, and applications—essentially giving them hands to work with, not just a mouth to talk or chat.

- Autonomous Decision-Making: These agents can evaluate situations, determine appropriate actions, and execute them autonomously.

Whether you're analyzing market trends or processing customer returns, AI agents provide the specialized capabilities needed to handle complex workflows autonomously. They bridge the gap between simple text generation and truly functional automated systems.

How do I supercharge LLMs with autonomous decision-making ability?

Vercel AI SDK's tool calling feature lets you equip your LLM with a toolkit of varied abilities. Think of it as handing your AI a utility belt—it can choose which tools to use when responding to requests.

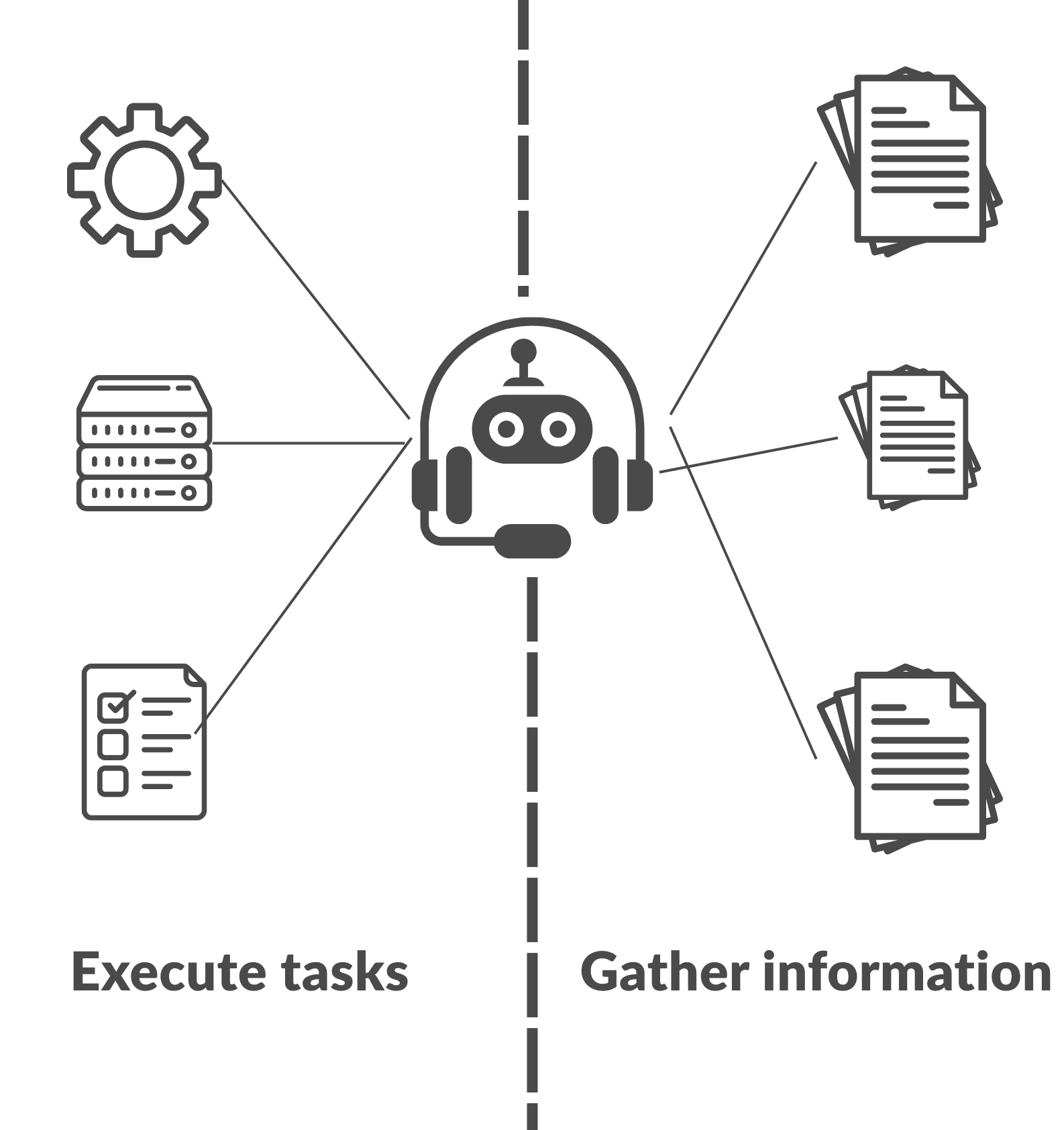

These tools usually fall into two categories:

- Information Gatherers - Pull data from external sources, like checking current stock prices or retrieving weather forecasts.

- Action Executors - Perform operations in other systems, such as sending emails or escalating support tickets.

The beauty is in the simplicity—you define the tools, and the AI decides when they're needed. Like having an assistant who knows when to hand you a coffee without being asked.
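To make the two categories concrete, here is a minimal sketch in plain TypeScript. It does not use the SDK at all: the function names, the stock data, and the outbox array are all made up for illustration. The point is only the difference in behaviour: one function reads, the other changes something.

```typescript
// Hypothetical in-memory data standing in for real external systems.
const stockPrices: Record<string, number> = { ACME: 123.45 };
const sentEmails: { to: string; body: string }[] = [];

// Information gatherer: looks something up and returns it, no side effects.
function getStockPrice(args: { symbol: string }): number | null {
  return stockPrices[args.symbol] ?? null;
}

// Action executor: changes state in another system (here, an outbox array).
function sendEmail(args: { to: string; body: string }): { delivered: boolean } {
  sentEmails.push(args);
  return { delivered: true };
}

console.log(getStockPrice({ symbol: "ACME" })); // 123.45
console.log(sendEmail({ to: "user@example.com", body: "Your ticket is open." }));
```

In a real agent, functions like these would become the execute part of a tool definition. Action executors deserve extra care, since the model decides when they run.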

Working with tools

Let's get started. The generateText function is the basic LLM invocation function in the Vercel AI SDK. You choose the model you want to use and the prompt you want to run.

```typescript
import { generateText } from "ai";
import { google } from "@ai-sdk/google";

const result = await generateText({
  model: google("gemini-2.0-flash"),
  prompt: "Write a brief description of the butterfly effect.",
});
```

Response:

```
text: "The butterfly effect is the idea that small, seemingly insignificant actions can have large and unpredictable consequences over time. It's often used to illustrate the sensitivity of complex systems to initial conditions, suggesting that even a butterfly flapping its wings in Brazil could, theoretically, set off a tornado in Texas.",
```

In the same generateText call, you also have the option to define tools. Tools are key-value pairs inside the tools object: the key is the tool's name and the value is its definition. In the example below, I have created a very simple tool that lets the LLM calculate the sum of two numbers. Zod is used to validate the input to the tool.

```typescript
import { generateText, tool } from "ai";
import { google } from "@ai-sdk/google";
import { z } from "zod";

const result = await generateText({
  model: google("gemini-2.0-flash"),
  tools: {
    add2Numbers: tool({
      description: "Add 2 numbers.",
      // Zod schema that validates the arguments the LLM passes to the tool.
      parameters: z.object({
        number1: z.number().describe("The first number whose sum is required"),
        number2: z.number().describe("The second number whose sum is required"),
      }),
      execute: async ({ number1, number2 }) => {
        return number1 + number2;
      },
    }),
  },
  prompt: "What is 221804598 + 860948995",
  // Two steps: one for the tool call, one for the final text answer.
  maxSteps: 2,
});
```

```
Answer: '221804598 + 860948995 is 1082753593.'
```

Every tool, from the simplest arithmetic helper to the most complex query, uses this same definition format: a description, a parameters schema, and an execute function.
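If you are wondering what happens between the model asking for a tool and your execute function running, the sketch below walks through it in plain TypeScript. It is a simplified illustration, not the SDK's internals: sketchTools, SketchToolCall, and runToolCall are hypothetical names, and a hand-written check stands in for the Zod schema.

```typescript
type AddArgs = { number1: number; number2: number };

// Hand-written check standing in for the Zod schema in the article.
function parseAddArgs(input: unknown): AddArgs {
  if (typeof input !== "object" || input === null) {
    throw new Error("Tool input must be an object");
  }
  const { number1, number2 } = input as Record<string, unknown>;
  if (typeof number1 !== "number" || typeof number2 !== "number") {
    throw new Error("number1 and number2 must both be numbers");
  }
  return { number1, number2 };
}

// Simplified registry: tool name -> description + execute.
const sketchTools = {
  add2Numbers: {
    description: "Add 2 numbers.",
    execute: (input: unknown): number => {
      const { number1, number2 } = parseAddArgs(input); // validate first
      return number1 + number2;
    },
  },
};

// Assumed shape of what the model emits when it decides to use a tool.
type SketchToolCall = { toolName: keyof typeof sketchTools; args: unknown };

// Look the tool up by name and run it with the model's arguments.
function runToolCall(call: SketchToolCall): number {
  return sketchTools[call.toolName].execute(call.args);
}

const sum = runToolCall({
  toolName: "add2Numbers",
  args: { number1: 221804598, number2: 860948995 },
});
console.log(sum); // 1082753593
```

Validating before executing matters because the arguments come from the model, and the model can get them wrong.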

Controlling Tool Usage with maxSteps

The maxSteps parameter defaults to 1, which allows a single LLM call: the model can request a tool, but it never gets a second turn to work the tool's result into a written answer. Let's say that you have 5 tools and you want your LLM to use a tool once before it answers. Set maxSteps to 2 and the process unfolds like this:

- Step 1: The LLM reads the prompt, picks the tool it needs, and calls it with the right arguments.

- Step 2: Once the tool returns its data, the LLM crafts your final response incorporating this newfound wisdom.

It's like giving your AI assistant permission to make exactly one phone call before answering your question. More generally, maxSteps caps the run at n steps, so the model can spend up to n - 1 of them on tool calls and still have one left for the final answer.
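The step budget is easier to see in a toy loop. The sketch below is plain TypeScript with a scripted fake model, so it runs without the SDK or an API key. Everything in it (fakeModel, runWithMaxSteps, the hard-coded 2 + 3) is an assumption made for illustration, and the real SDK loop does more than this.

```typescript
// One model turn: either a request to call a tool, or final text.
type ModelTurn =
  | { kind: "tool-call"; toolName: string; args: { number1: number; number2: number } }
  | { kind: "text"; text: string };

// Scripted fake model: asks for the tool first, then answers once a
// tool result is available.
function fakeModel(toolResults: number[]): ModelTurn {
  if (toolResults.length === 0) {
    return { kind: "tool-call", toolName: "add2Numbers", args: { number1: 2, number2: 3 } };
  }
  return { kind: "text", text: `The sum is ${toolResults[0]}.` };
}

// Simplified loop: each iteration is one step, meaning one model call.
function runWithMaxSteps(maxSteps: number): {
  text: string;
  stepsUsed: number;
  toolResults: number[];
} {
  const toolResults: number[] = [];
  let text = "";
  let stepsUsed = 0;
  while (stepsUsed < maxSteps) {
    stepsUsed++;
    const turn = fakeModel(toolResults);
    if (turn.kind === "text") {
      text = turn.text;
      break; // final answer reached, stop early
    }
    // Tool call: run the tool and keep the result for the next step.
    toolResults.push(turn.args.number1 + turn.args.number2);
  }
  return { text, stepsUsed, toolResults };
}

console.log(runWithMaxSteps(1)); // tool ran, but text is still empty
console.log(runWithMaxSteps(2)); // text is "The sum is 5."
console.log(runWithMaxSteps(5)); // same answer, still only 2 steps used
```

Note the last call: maxSteps is a ceiling, not a target. Once the model produces its final text, the loop ends even if steps remain.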

Let's take a real-world example. You want to create a customer service representative that can act as a buffer between your customers and your service employees or managers. This buffer will help you sort queries faster, so that only genuine issues reach your employees, whose time is precious. Think of it as your digital bouncer, politely handling routine questions at the door while escorting the VIPs and emergencies straight to the right person. No more wasting your team's time explaining where the "forgot password" button is located for the fifteenth time today. What capabilities would you give your agent?

Let's list them:

- Generate a ticket.

- Get the details of a ticket based on its ticket ID.

- Escalate a ticket to an employee.

- Escalate a ticket to a manager.

Now let's look at what the code would look like:

```typescript
import { generateText, tool } from "ai";
import { google } from "@ai-sdk/google";
import { z } from "zod";

// raiseTicket, escalateIssue, getTicketDetails, and prompt (the customer's
// message) are assumed to be defined elsewhere in your application.
const result = await generateText({
  model: google("gemini-2.0-flash"),
  tools: {
    generateTicket: tool({
      description: "Generate a ticket for the user's query.",
      parameters: z.object({
        ticketTitle: z.string().describe("The title for the customer's issue."),
        ticketDescription: z
          .string()
          .describe("The description for the customer's issue."),
      }),
      execute: async ({ ticketTitle, ticketDescription }) => {
        const ticket = await raiseTicket(ticketTitle, ticketDescription);
        return ticket;
      },
    }),
    escalateTicketToEmployee: tool({
      description:
        "Based on the severity and duration of the ticket, escalate it to a customer service Employee.",
      parameters: z.object({
        ticketId: z.string().describe("The unique ID of the ticket."),
      }),
      execute: async ({ ticketId }) => {
        const escalation = await escalateIssue(ticketId, "employee");
        return escalation;
      },
    }),
    escalateTicketToManager: tool({
      description:
        "Ticket with the highest severity should be escalated to the Manager.",
      parameters: z.object({
        ticketId: z.string().describe("The unique ID of the ticket."),
      }),
      execute: async ({ ticketId }) => {
        const escalation = await escalateIssue(ticketId, "manager");
        return escalation;
      },
    }),
    getTicketDetails: tool({
      description: "Based on the ticketID, get the details of the ticket.",
      parameters: z.object({
        ticketId: z.string().describe("The unique ID of the ticket."),
      }),
      execute: async ({ ticketId }) => {
        const details = await getTicketDetails(ticketId);
        return details;
      },
    }),
  },
  prompt,
  // Up to three tool-calling steps plus one step for the final reply.
  maxSteps: 4,
});
```

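In summary, the code defines the four tools we listed earlier. With maxSteps set to 4, each generateText call lets the LLM take at most four steps, for example creating a ticket, looking it up, escalating it, and then writing the final reply.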
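The ticket code calls raiseTicket, escalateIssue, and getTicketDetails without showing them, so here is one way they could look if you want to try the agent locally. This is a hypothetical in-memory stand-in: the Ticket shape, the ID format, and the error objects are my assumptions, and a real system would talk to a ticketing API or database and return promises.

```typescript
type Ticket = {
  id: string;
  title: string;
  description: string;
  assignedTo: "unassigned" | "employee" | "manager";
};

// Hypothetical in-memory store standing in for a real ticketing backend.
const ticketStore = new Map<string, Ticket>();
let nextTicketNumber = 1;

// Create a ticket and return it, including its generated ID.
function raiseTicket(title: string, description: string): Ticket {
  const ticket: Ticket = {
    id: `T-${nextTicketNumber++}`,
    title,
    description,
    assignedTo: "unassigned",
  };
  ticketStore.set(ticket.id, ticket);
  return ticket;
}

// Look a ticket up by ID, or return an error object if it does not exist.
function getTicketDetails(ticketId: string): Ticket | { error: string } {
  return ticketStore.get(ticketId) ?? { error: `No ticket with ID ${ticketId}` };
}

// Assign the ticket to an employee or a manager.
function escalateIssue(
  ticketId: string,
  level: "employee" | "manager"
): Ticket | { error: string } {
  const ticket = ticketStore.get(ticketId);
  if (!ticket) return { error: `No ticket with ID ${ticketId}` };
  ticket.assignedTo = level;
  return ticket;
}

const demoTicket = raiseTicket("Login fails", "Customer cannot sign in.");
console.log(escalateIssue(demoTicket.id, "manager"));
```

Returning an error object instead of throwing is a deliberate choice in this sketch: the tool result goes back to the model, so a readable error lets it explain the problem or try a different ticket ID.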

Conclusion

Tool calling turns your AI from a conversational companion into an active problem-solver that can streamline workflows and automate mundane tasks, with the Vercel AI SDK handling most of the logic and boilerplate.